Full API MCP Exposure vs. Specific Workflows

Should you expose your full API through MCP or carefully select specific workflows?

AI agents have too much access to APIs. This is the opinion a few API community members have been sharing. This time it's not about what operations AI agents can execute. Instead, it's about what those agents can do with a combination of operations. Some people defend that AI agents should be given a set of pre-configured workflows instead of opening access to all possible combinations of operations. But, is that approach something you can even call agentic? I mean, if you give an AI agent access to a list of pre-conceived workflows, there's not much agency in what it can do. Right? The whole idea of letting AI figure out how to use API operations was based on the idea that it would autonomously figure out what it could do. I can see there are pros and cons on both sides. Which one is the best approach, then? Stay with me to explore the possibilities.

This article is brought to you with the help of our supporter, n8n.

n8n is the fastest way to plug AI into your own data. Build autonomous, multi-step agents, using any model, including self-hosted. Use over 400 integrations to build powerful AI-native workflows.

Let's start with the thought of exposing your whole API to AI through MCP. What are the pros and cons of doing that? What are the things you should consider before even thinking about doing it? Why should you—or shouldn't you—do it, in the first place? If I were you, I'd treat an AI agent in the same way I would treat a human consumer. I'd follow the same rules for both scenarios. The first thing to think about is the number of API operations you want to expose. Make too many operations available and you risk making your API hard to understand and use. If you expose too few operations, you might leave important possibilities on the table. It's definitely not easy to pick one option or the other. On top of that, you have other factors to reflect upon, such as security. The number of exposed operations, according to security experts, is proportional to the probability of your API being attacked. And that's something you don't want to happen, I presume. I feel like one thing is clear. And that is the number of operations you expose should be low. And those operations should be concise and do one thing really well. If this is what you do, then AI agents can freely decide what API operations to use and how to combine them. If, on the other hand, you're not comfortable handing the decision-making power to AI, then exposing one or more specific workflows might be your choice.

Creating a specific workflow and exposing it through an MCP is a great option if what you want is full control over what AI agents can execute. The choice of offering a workflow leaves no room for interpretation. AI agents will simply parse the workflow definition and execute whatever operations are there, one by one. Another advantage is that there won't be any ambiguity about input parameters for each operation and how operations are chained together. Everything is configured in the workflow definition. However, the higher the number of operations a workflow has, the higher the chances of failure are. And, how do you handle the failure of a multi-step workflow? Should the AI agent retry the operation that triggered the failure? Or, should it give up and share an error message to the end user? These are questions that don't have easy answers, I know. "It depends" can't certainly be the right answer. At least, not for me. That is why some people prefer a third option, which is to encapsulate the chaining of multiple workflow steps into a single exposed API operation.

Exposing a full workflow on a single API operation is the option that gives you the most control. After all, the combination of all the workflow steps is abstracted behind a single API call. AI agents won't even be able to distinguish between operations that expose workflows and ones that don't. Since all the steps are encapsulated, there's no room for different interpretations. And, the decision of what to do in case of failure is made by the API, not by the AI agents. Altogether, it looks like this is the best option. A closer inspection, however, reveals that it also has its drawbacks. One thing to pay attention to is the length in time of the workflow. The longer it takes a workflow to finish, the higher the chances of the AI agent giving up due to a timeout error. Remember that now, from the AI agent’s perspective, your workflow is a single API operation. If it takes too long, it's better to handle it asynchronously. The second situation has to do with workflow failures and reversible operations. In this case, since AI agents don't know that the API operation is a multi-step workflow, all control is left to the API itself. The decision of what to do in case of failure needs to be baked into the API. So, for example, you need to program the number of retries if one step fails. Or, whether or not to try to undo all previous steps. And, if that's the case, to program all undo operations.

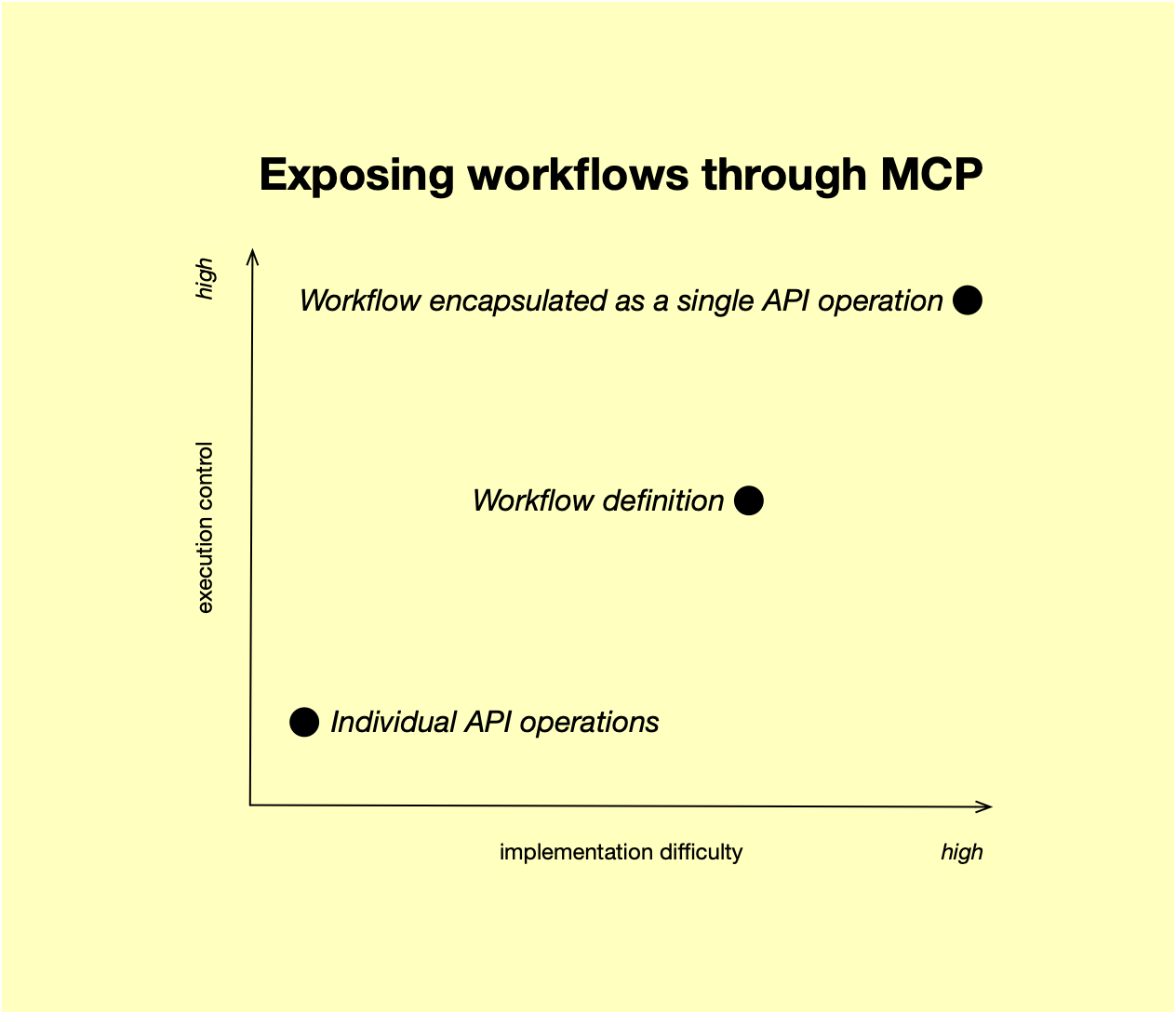

In summary, there is no one-size-fits-all solution. You can see the solution space as a spectrum where the power AI agents have is inversely proportional to how easy it is to implement a multi-step workflow. On one extreme, you have AI agents that make all the decisions about the operations to call on each workflow step. On the other extreme, you have a full multi-step workflow baked into your API and exposed as a single operation. The decision, in the end, is a factor of how much control you need to have against the cost of implementing the final solution.